- Meta, formerly Facebook, is set to introduce invisible watermarks to all images generated by its AI, enhancing transparency and traceability.
- This is part of its strategy to address concerns about AI-powered scams, deepfake content, and the broader challenge of misinformation on its platforms.
- The resilient invisible watermarks, applied through deep learning, reflect its commitment to responsible AI practices.

Social media giant Meta, previously known as Facebook, is taking proactive steps to address concerns about the misuse of its Artificial Intelligence (AI) technology. In a report released on December 6, the company announced its intention to incorporate an invisible watermark into all images generated using Meta AI, the virtual assistant powered by AI.

This move is part of Meta’s broader strategy to prevent malicious actors from exploiting AI technology for deceptive purposes. The invisible watermark for “Imagine with Meta AI” images is expected to enhance transparency and traceability, making it harder to manipulate or misuse AI-generated content.

The watermarking feature will be introduced in the “Imagine with Meta AI” experience, where users can generate images and content by interacting with the AI system. The company plans to use a deep-learning model to apply invisible watermarks to these images.

Meta claims that, unlike traditional watermarks, which are easily removed by cropping or other manipulations, its invisible watermarks will be resilient to common image alterations such as cropping, colour changes, and screenshots. This robustness is meant to keep the watermark effective in maintaining the content’s integrity and preventing unauthorized use.

Applying invisible watermarks to AI-generated images aligns with Meta’s commitment to responsible and secure AI practices. The company recognizes the growing threat of AI-powered scams and deceptive campaigns, where malicious actors use AI tools to create fake videos, audio, and images, often impersonating public figures or spreading false information.

The move also addresses the broader challenges social media platforms face in curbing the spread of misinformation and deepfake content. By implementing invisible watermarks, Meta aims to provide an additional layer of security and accountability to the content generated through its AI systems.


Moreover, Meta AI’s latest update introduces the “reimagine” feature for Facebook Messenger and Instagram. This feature enables users to send and receive AI-generated images within the messaging platforms.

Incorporating the invisible watermark feature in these services extends the effort to enhance transparency and traceability to a broader user base. Meta’s decision to apply watermarks to AI-generated content reflects a comprehensive approach to addressing concerns about the misuse of AI technology and deepfake content on its platforms.

Recently, there has been a surge in the misuse of generative Artificial Intelligence tools, leading to concerns about the authenticity and credibility of digital content. Various instances of AI-generated content being used for scams, misinformation, and deceptive campaigns have highlighted the need for advanced safeguards.

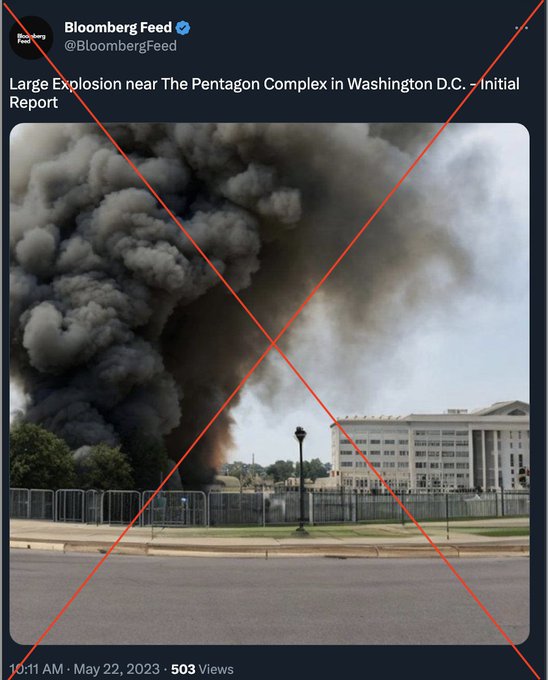

For instance, in May this year, AI-generated images depicting false events, such as an explosion near the Pentagon, caused temporary disruptions in financial markets. Meta’s move to implement invisible watermarks is a proactive response to these challenges, aiming to give users more confidence in the authenticity of the content they encounter on its platforms.

Meta’s emphasis on the resilience of its invisible watermarks against common image manipulations underscores its commitment to staying ahead of adversarial attempts to bypass security measures. Traditional watermarks often fail to survive such manipulations intact.

Meta’s approach leverages deep learning to create watermarks that can withstand alterations, adding a layer of sophistication to content protection. The company’s plan to extend this feature to other services that use AI-generated images signals an ongoing commitment to maintaining high standards of content integrity and user trust across its ecosystem.

The broader implications of Meta’s watermarking initiative extend beyond the immediate context of AI-generated content. The technology industry, in general, has been grappling with the ethical and security challenges posed by the rapid advancement of AI.

Meta’s proactive measures to address these challenges set a precedent for responsible AI deployment and may influence industry standards. The company’s stated commitment to collaboration and transparency demonstrates a dedication to ethical considerations in developing and deploying AI technologies.

In conclusion, Meta’s decision to implement invisible watermarks in AI-generated images marks a significant step toward enhancing the security, transparency, and traceability of content on its platforms. The company’s commitment to addressing concerns related to the misuse of AI technology aligns with broader industry efforts to establish responsible AI practices.

As AI continues to play a crucial role in shaping digital experiences, Meta’s proactive approach sets a positive example for leveraging advanced technologies responsibly. The deployment of invisible watermarks represents a forward-looking strategy to stay ahead of emerging challenges in the evolving landscape of digital content and AI-driven interactions.