- Introducing a new AI approach that mimics human decision-making to combat AI cheating in education.
- The use of large language models (LLMs) for assignments threatens academic integrity as cheating becomes more common.
- Agentic modelling provides advanced detection capabilities to maintain academic integrity and preserve the value of essay writing.

As a new academic term begins, students and educators prepare for another cycle of learning, growth, and discovery. However, the rise of AI cheating has cast a shadow over the education system, raising concerns about academic integrity.

While technology has brought numerous benefits to education, it has also introduced challenges that require innovative solutions. Enter agentic modelling, a cutting-edge approach to AI that promises to combat cheating and enhance the capabilities of next-gen AI systems.

Agentic Modelling: New AI Modelling Boosts the Power of Next-Gen AI Systems

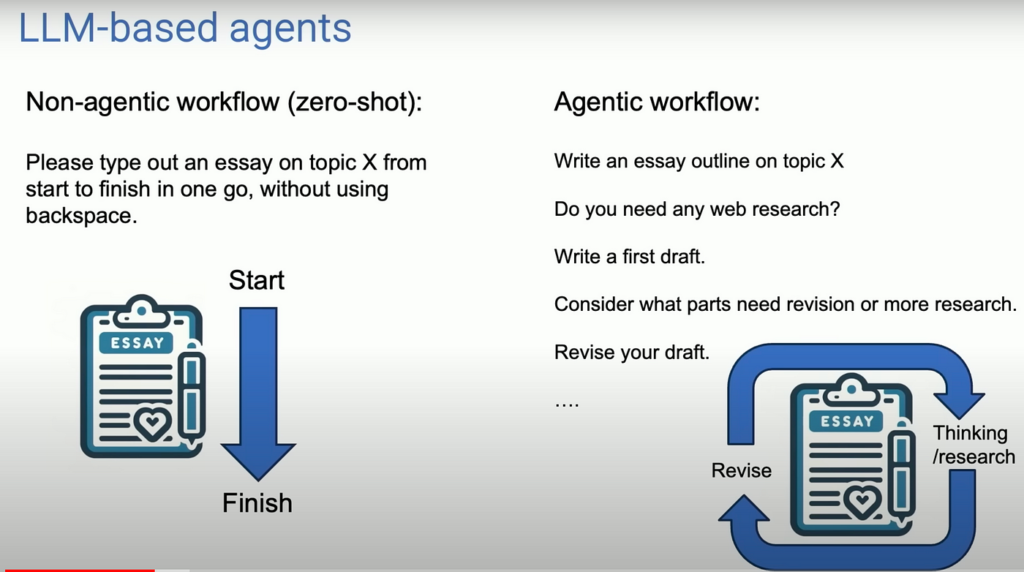

Agentic modelling is an advanced AI framework focused on agency and decision-making. Unlike traditional AI models based on predefined rules, agentic models simulate human-like decision-making processes. This allows them to adapt and respond to complex scenarios in real time, making them highly effective in dynamic environments.

Traditional AI models rely heavily on pattern recognition and data analysis. While these methods are powerful, they often lack the flexibility to handle nuanced situations. On the other hand, agentic modelling incorporates elements of cognitive psychology and behavioural science, enabling AI systems to understand context, make informed decisions, and even predict human behaviour.

The Current State of AI in Education

AI has become integral to education, offering personalized learning experiences, aiding in administrative tasks, and detecting plagiarism. However, the same technology has also facilitated cheating, where students use AI tools to complete assignments, undermining the quality of education and posing a threat to academic integrity.

University professors are increasingly concerned about students’ adept use of LLMs like GPT-3 for writing and other academic tasks. The landscape has evolved into an arms race between AI-driven cheating and detection methods. Despite efforts to develop reliable detection systems, the challenge remains formidable.

For instance, OpenAI has developed a method to detect AI-generated content but has yet to release it, citing limitations and ethical concerns. The Association for Computing Machinery (ACM) has stated that detecting generative AI content without an embedded watermark is beyond the current state of the art.

Agentic Modelling in Addressing AI Cheating

Agentic modelling offers a promising solution to the issue of AI cheating. By simulating human decision-making processes, these models can more accurately detect anomalies in student work that may indicate AI assistance. For example, an agentic model could analyze the progression of a student’s writing style over time, identifying sudden and unexplained changes that suggest external aid.


Consider a hypothetical scenario where a student’s writing significantly improves overnight. An agentic model could flag this as suspicious and prompt a review. Similarly, if a student uses complex vocabulary and sentence structures inconsistent with their previous work, the model could detect the disparity.
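To make the idea concrete, here is a minimal Python sketch of how a sudden shift in writing style might be flagged. It is a simple statistical heuristic, not an agentic model, and it rests on assumptions the article does not specify: the function names `style_features` and `flag_style_shift`, the three crude features, and the `z_threshold` value are all hypothetical choices for illustration.

```python
import re
from statistics import mean, stdev


def style_features(text):
    """Return a few crude stylometric features for one essay (illustrative only)."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text.lower())
    return {
        "avg_sentence_len": len(words) / max(len(sentences), 1),
        "avg_word_len": mean(len(w) for w in words) if words else 0.0,
        "type_token_ratio": len(set(words)) / max(len(words), 1),
    }


def flag_style_shift(previous_essays, new_essay, z_threshold=3.0):
    """Return the features of new_essay that deviate strongly from the student's history.

    z_threshold is a hypothetical cut-off, not a validated value.
    """
    history = [style_features(e) for e in previous_essays]
    if len(history) < 2:
        return {}  # not enough history to estimate a spread
    current = style_features(new_essay)
    flagged = {}
    for name, value in current.items():
        past = [h[name] for h in history]
        mu, sigma = mean(past), stdev(past)
        if sigma == 0:
            continue  # no variation in the history, so a z-score is undefined
        z = (value - mu) / sigma
        if abs(z) > z_threshold:
            flagged[name] = round(z, 2)
    return flagged
```

A non-empty result would only justify a human review, as the scenario above describes. Students also improve for legitimate reasons, so a flag like this is a prompt to look closer rather than evidence of cheating.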

In one case study, an educational institution implemented agentic modelling to monitor online exams. The system successfully identified several instances of cheating by analyzing behaviour patterns, such as rapid, uniform responses indicative of automated assistance.
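The "rapid, uniform responses" signal can be sketched in a few lines of Python. This is an assumption-laden illustration, not the system used in the case study: the function name `looks_automated`, the minimum sample size, and both thresholds (`max_mean_seconds`, `max_cv`) are hypothetical.

```python
from statistics import mean, pstdev


def looks_automated(response_times, max_mean_seconds=5.0, max_cv=0.15):
    """Heuristically flag answer timings that are both fast and unusually uniform.

    response_times: seconds spent on each question.
    Both thresholds are hypothetical and would need tuning on real exam data.
    """
    if len(response_times) < 5:
        return False  # too few answers to judge a pattern
    avg = mean(response_times)
    if avg <= 0:
        return False  # invalid timings
    # Coefficient of variation: spread relative to the mean; low means uniform.
    cv = pstdev(response_times) / avg
    return avg <= max_mean_seconds and cv <= max_cv


# Example: near-identical two-second answers versus varied human pacing.
print(looks_automated([2.0, 2.1, 1.9, 2.0, 2.05, 1.95]))
print(looks_automated([12, 45, 8, 90, 30, 22]))
```

Requiring both conditions is a deliberate choice in this sketch: fast answers alone may reflect a well-prepared student, and uniform pacing alone may reflect a methodical one.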

Implications for the Future of Education and Academic Integrity

The long-term implementation of agentic models in the education system holds significant promise. These models can help maintain academic integrity by providing more robust mechanisms for detecting and preventing AI cheating. However, their use must be balanced with privacy considerations and ethical AI development.

Educational institutions must adopt ongoing development and oversight practices to use these models responsibly. This includes transparent policies on data usage, regular audits of AI systems, and continuous updates to address emerging threats.

Essay Writing and Large Language Models

Essay writing is a fundamental component of humanities education, serving as a tool for assessing students’ critical thinking and communication skills. However, the rise of large language models (LLMs) has complicated this traditional method. Students can now use LLMs to generate essays, raising questions about originality and effort.


Agentic modelling can help maintain academic integrity in this context. These models can differentiate between genuine and AI-generated content by analyzing the intricacies of a student’s writing process. This approach not only preserves the value of essay writing but also encourages students to engage deeply with the material.

Educators must rethink and adapt their teaching methods to address these challenges. Viewing LLMs as “cultural technologies” for augmentation rather than replacement can help integrate these tools into the learning process. Encouraging students to value the process of writing over the product fosters intellectual development and creativity.

Josh Brake, an academic who advocates for engaging with AI in the classroom, emphasizes the importance of writing as an intellectual activity. He argues that if students understand the value of the writing process, they are less likely to outsource their work to AI. This perspective aligns with the broader goal of using agentic modelling to enhance, rather than undermine, educational outcomes.

Conclusion

Agentic modelling represents a significant advancement in fighting AI cheating and preserving academic integrity. By mimicking human decision-making processes, these models offer a more nuanced and practical approach to detecting and preventing cheating.

The future of education will undoubtedly involve advanced AI systems, but their development must be guided by ethical considerations and a commitment to enhancing learning outcomes. Ongoing research and discussion are essential to ensure that AI technologies continue to serve as tools for human augmentation, not replacement.

As we move forward, educators, students, and AI developers must collaborate to create a balanced and effective educational environment. Through innovative solutions like agentic modelling, we can harness the power of AI while safeguarding the values that underpin our education system.